Explainable AI

Causal explanation of autonomous vehicle decision-making

Artificial Intelligence (AI) shows promise for perception and planning tasks in autonomous driving (AD) because it can outperform conventional methods. However, inscrutable AI systems exacerbate the existing challenge of safety assurance in AD. One way to mitigate this challenge is to use explainable AI (XAI) techniques.

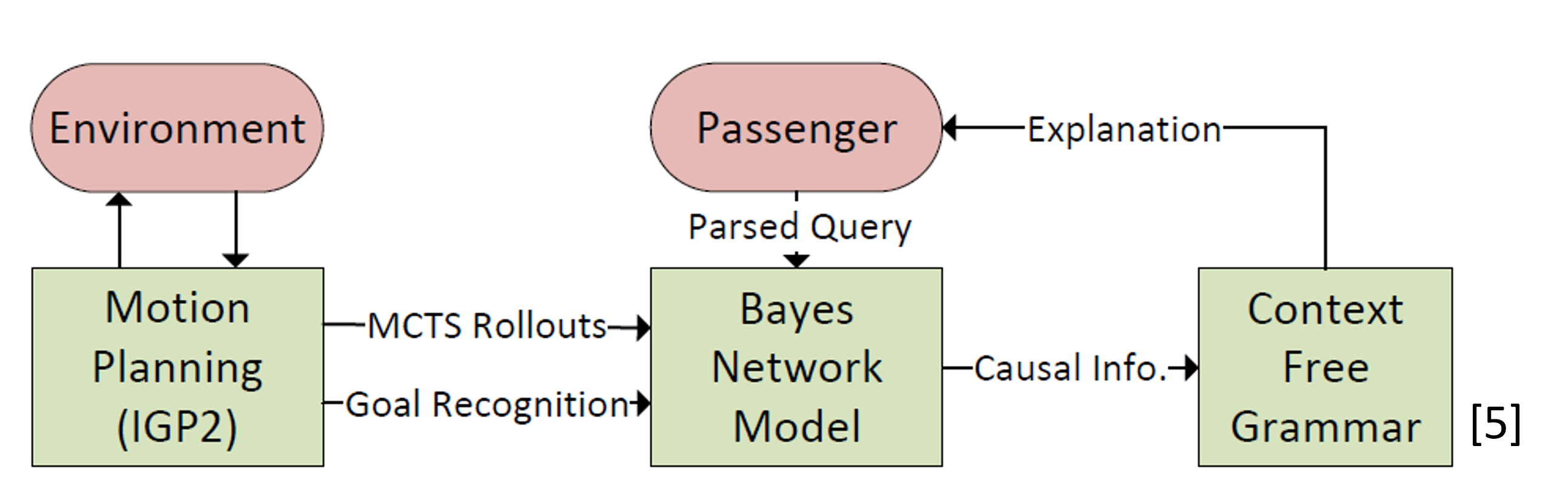

To this end, this project aims to develop novel methods for explaining AI-driven decisions in AD to various stakeholders in intelligible language. So far, we have reached the following milestones in explaining Monte Carlo Tree Search (MCTS)-based decision-making:

1. A human-centric method for generating causal explanations in natural language for autonomous vehicle motion planning [paper][video]

2. Causal Social Explanations for Stochastic Sequential Multi-Agent Decision-Making [paper]

To evaluate our method's performance, we also conducted a user study. If you are interested in human explanations for AD decision-making, the collected data can be found here. Our work can also be found at FIVE AI.